The [Almost] Great Internet Crash: 2014-08-12 is 512K Day

Abstract: Since the creation of computers, people have been connecting computer equipment together through different means. Proprietary cables and protocols were constantly being designed, until the advent of The Internet. Based upon TCP/IP, there seemed to be little to limit its growth, until the 32-bit address range started to "run out" as more people in more countries wanted to come on-line. On August 12, 2014, an event affectionately referred to as "512K Day" occurred, a direct result of the IPv4 hacks used to keep the older address scheme alive until IPv6 could be implemented.

History:

The Internet was first created with the Internet Engineering Task Force (IETF) publication of "RFC 760" in January 1980, later replaced by "RFC 791" in September 1981. These defined the 32-bit version of TCP/IP, called "IPv4". During the first decade of The Internet, addresses were allocated in basic "classes", according to the network size the applicant needed.

As corporations and individuals started to use The Internet, it was realized that this class-based allocation was not scalable, so the IETF published "RFC 1518" and "RFC 1519" in 1993 to break the larger blocks down into finer-grained slices for allocation, called Classless Inter-Domain Routing (or "CIDR", which was subsequently refreshed in 2006 as "RFC 4632"). Network Address Translation ("NAT") was also created.
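To make the idea of "finer-grained slices" concrete, here is a minimal sketch using Python's standard `ipaddress` module. The block `172.16.0.0/16` and the choice of `/22` slices are arbitrary examples picked for illustration, not allocations taken from the RFCs.

```python
import ipaddress

# An example block the size of an old "Class B" network (arbitrary choice).
block = ipaddress.ip_network("172.16.0.0/16")
print(block.num_addresses)  # 65536

# With CIDR the same space can be handed out in smaller, right-sized slices.
# Here the /16 is cut into /22 networks of 1,024 addresses each.
slices = list(block.subnets(new_prefix=22))
print(len(slices))              # 64
print(slices[0])                # 172.16.0.0/22
print(slices[0].num_addresses)  # 1024
```

Under the class-based scheme an applicant needing about a thousand addresses would likely have received the whole block; with CIDR the remaining slices stay available for others.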

The Private Internet Addresses were published as part of "RFC 1918" in February 1996, in order to help alleviate the problem of "sustained exponential growth". Service Providers used NAT and CIDR to continue to facilitate the massive expansion of The Internet, using private networks hidden behind a single Internet-facing IP address.
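As a rough illustration, the three private ranges reserved by RFC 1918 can be checked with Python's standard `ipaddress` module. The helper name `is_rfc1918` and the sample addresses are made up for this sketch.

```python
import ipaddress

# The three private address blocks reserved by RFC 1918.
RFC1918_BLOCKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]

def is_rfc1918(addr: str) -> bool:
    """Return True if addr falls inside one of the RFC 1918 private blocks."""
    ip = ipaddress.ip_address(addr)
    return any(ip in net for net in RFC1918_BLOCKS)

# Hypothetical sample addresses: two private hosts and one public address.
for sample in ("192.168.1.10", "10.20.30.40", "8.8.8.8"):
    print(sample, is_rfc1918(sample))
```

Hosts with addresses in these blocks cannot be routed on the public Internet directly, which is why they sit behind a NAT device that shares one public address.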

In 1998, the IETF formalized "IPv6" as a successor protocol for The Internet, based upon 128-bit addresses. The thought was that providers would move to IPv6 and sunset IPv4, along with the NAT hack.
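The difference between 32 and 128 bits is easy to underestimate, so here is the arithmetic as a small Python sketch. The variable names are only for illustration.

```python
IPV4_BITS = 32
IPV6_BITS = 128

# Total number of distinct addresses each scheme can represent.
ipv4_total = 2 ** IPV4_BITS
ipv6_total = 2 ** IPV6_BITS

print(f"IPv4: {ipv4_total:,}")  # IPv4: 4,294,967,296
print(f"IPv6: {ipv6_total:,}")

# How many complete IPv4 Internets would fit inside the IPv6 address space.
print(f"Ratio: 2**{IPV6_BITS - IPV4_BITS} = {ipv6_total // ipv4_total:,}")
```

These are raw totals; the usable number is smaller in both cases because some ranges are reserved.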

[Example of a private network sitting behind a public WAN/Internet connection]

Routing and system vendors started supporting IPv6, but the vast majority of users continue to use the CIDR and NAT hacks to run The Internet. Meanwhile The Internet, for the most part, has run out of IPv4 addresses, a condition called Address Exhaustion.

The IP address space is managed by the Internet Assigned Numbers Authority (IANA) globally, and by five regional Internet registries (RIR) responsible in their designated territories for assignment to end users and local Internet registries, such as Internet service providers. The top-level exhaustion occurred on 31 January 2011.[1][2][3] Three of the five RIRs have exhausted allocation of all the blocks they have not reserved for IPv6 transition; this occurred for the Asia-Pacific on 15 April 2011,[4][5][6] for Europe on 14 September 2012, and for Latin America and the Caribbean on 10 June 2014.

Now, over a decade later, people are still using IPv4 with CIDR and NAT, trying to avoid the inevitable migration to IPv6.

[Normal outage flow with an unusual spike on 2014-08-12]

Warning... Warning... Will Robinson!

The problems with relying on CIDR and NAT were well known: address space would become so fragmented over time that routing tables would eventually hit their maximums, crashing segments of The Internet.

Discussions started around 2007 on how to mitigate this issue over the next half-decade. It was known that routing equipment can handle only a limited number of routes.

...this _should_ be a relatively safe way for networks under the gun to upgrade (especially those running 7600/6500 gear with anything less than Sup720-3bxl) to survive on an internet with >~240k routes and get by with these filtered routes, either buying more time to get upgrades done or putting off upgrades for perhaps a considerable time.

On May 12, 2014 - Cisco published a technical article warning people of the upcoming event.

As an industry, we’ve known for some time that the Internet routing table growth could cause Ternary Content Addressable Memory (TCAM) resource exhaustion for some networking products. TCAM is a very important component of certain network switches and routers that stores routing tables. It is much faster than ordinary RAM (random access memory) and allows for rapid table lookups.

No matter who provides your networking equipment, it needs to be able to manage the ongoing growth of the Internet routing table. We recommend confirming and addressing any possible impacts for all devices in your network, not just those provided by Cisco.

On June 9, 2014 - Cisco published technical article 117712 on how to deal with the 512K route limit on some of their largest equipment... when the high-speed TCAM memory segment overflows.

Cisco's solution will steal memory from IPv6 and MPLS labels, but allocate up to 1 million routes.

When a route is programmed into the Cisco Express Forwarding (CEF) table in the main memory (RAM), a second copy of that route is stored in the hardware TCAM memory on the Supervisor as well as any Distributed Forwarding Card (DFC) modules on the linecards.

This document focuses on the FIB TCAM; however, the information in this document can also be used in order to resolve these error messages:

%MLSCEF-SP-4-FIB_EXCEPTION_THRESHOLD: Hardware CEF entry usage is at 95% capacity for IPv4 unicast protocol

%MLSCEF-DFC4-7-FIB_EXCEPTION: FIB TCAM exception, Some entries will be software switched

%MLSCEF-SP-7-FIB_EXCEPTION: FIB TCAM exception, Some entries will be software switched
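The messages quoted above describe two conditions: a warning at 95% usage, and an exception once the table overflows and some entries are software switched. The following Python sketch models only that logic. The function name `tcam_status` is hypothetical, and the default capacity of 512,000 entries is an assumption taken from the round figure used in this article; real limits depend on the platform and its configuration.

```python
# Assumption: capacity uses the round "512,000" figure quoted in this article.
ASSUMED_CAPACITY = 512_000
# Mirrors the 95% figure in the FIB_EXCEPTION_THRESHOLD message.
WARN_THRESHOLD = 0.95

def tcam_status(ipv4_routes: int, capacity: int = ASSUMED_CAPACITY) -> str:
    """Classify a route count against an assumed hardware table capacity."""
    if ipv4_routes > capacity:
        # Overflow: the extra entries no longer fit in the hardware table.
        return "exception: some entries will be software switched"
    if ipv4_routes / capacity >= WARN_THRESHOLD:
        return "warning: usage at or above 95% of capacity"
    return "ok"

# Route counts mentioned in this article: ~240k (2007) and 515,000 (the spike).
for routes in (240_000, 490_000, 515_000):
    print(routes, tcam_status(routes))
```

This is a toy model for reasoning about headroom, not a description of how any vendor's hardware actually counts entries.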

On July 25, 2014 - people started reminding others to adjust their routing cache sizes!

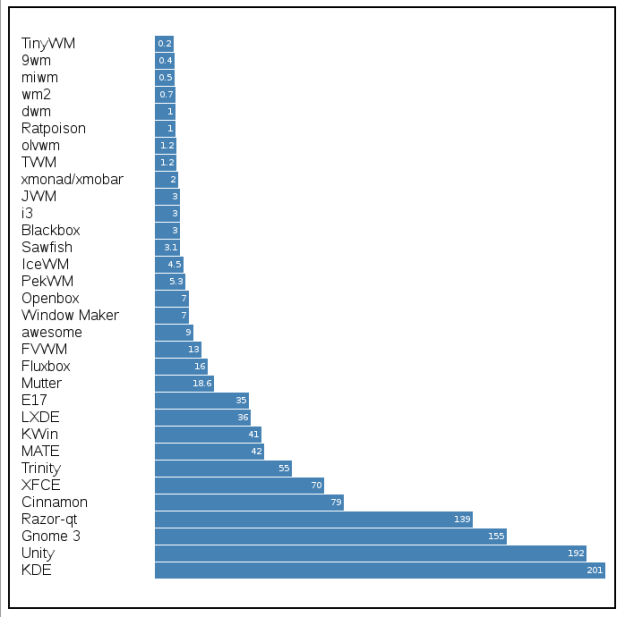

As many readers on this list know the routing table is approaching 512K routes.

How do they know? Well, common people have an insight into this through the "CIDR Report"... yes, anyone can watch the growth of The Internet.

For some it has already passed this threshold.

[PacketLife.net warning on 2014-05-06 of the 512K limit]

The Day Parts of The Internet Crashed:

Cisco published a Service Provider note "SP360", to note the event.

Today we know that another significant milestone has been reached, as we officially passed the 512,000 or 512k route mark! Our industry has known this milestone was approaching for some time. In fact it was as recently as May 2014 that we provided our customers with a reminder of the milestone, the implications for some Cisco products, and advice on appropriate workarounds.

Both technical journals and business journals started noticing the issue. People started to notice that The Internet was becoming unstable on August 13, 2014. The Wall Street Journal published on August 13, 2014:

The problem also draws attention to a real, if arcane, issue with the Internet's plumbing: the shrinking number of addresses available under the most popular routing system. That system, called IPv4, can handle only a few billion addresses. But there are already nearly 13 billion devices hooked up to the Internet, and the number is quickly growing, Cisco said.

Version 6, or IPv6, can hold many orders of magnitude more addresses but has been slow to catch on. In the meantime, network engineers are using stopgap measures

The issue was inevitable, but what was the sequence of events?

|

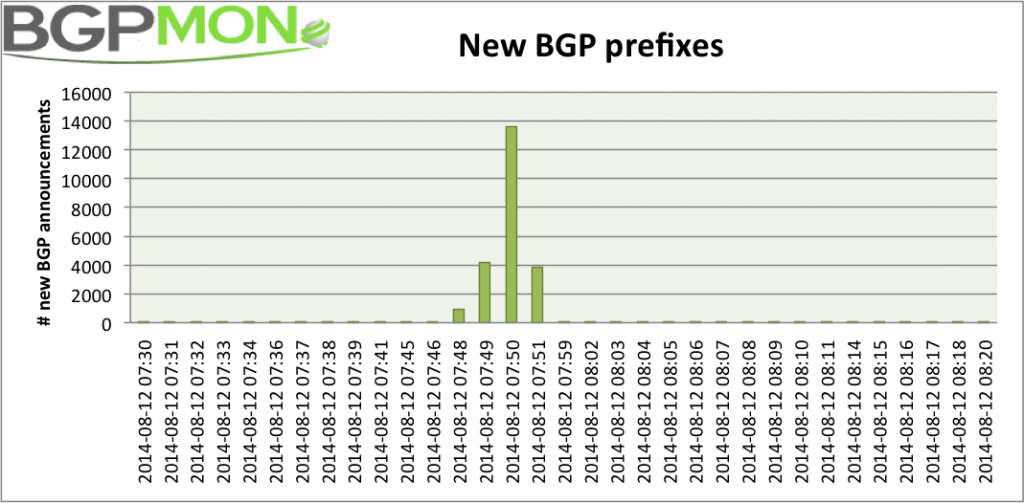

| [BGP spike shown by GBPMon] |

Apparently, Verizon released thousands of small networks into the global routing tables:

So whatever happened internally at Verizon caused aggregation for these prefixes to fail which resulted in the introduction of thousands of new /24 routes into the global routing table. This caused the routing table to temporarily reach 515,000 prefixes and that caused issues for older Cisco routers.

Whether this was a mistake or not is not the issue, this situation was inevitable.

Luckily Verizon quickly solved the de-aggregation problem, so we’re good for now. However the Internet routing table will continue to grow organically and we will reach the 512,000 limit soon again.
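To see why a failure of aggregation inflates the routing table so quickly, consider this small Python sketch using the standard `ipaddress` module. The prefix `10.0.0.0/16` is only a stand-in chosen so that no real network is named; it is not one of the prefixes involved in the event.

```python
import ipaddress

# A single aggregated route covering 65,536 addresses (stand-in prefix).
aggregate = ipaddress.ip_network("10.0.0.0/16")

# If aggregation fails, the same space is announced as individual /24 routes.
deaggregated = list(aggregate.subnets(new_prefix=24))
print(len(deaggregated))  # 256

# Re-aggregating the contiguous /24s collapses them back to a single entry.
reaggregated = list(ipaddress.collapse_addresses(deaggregated))
print(reaggregated)  # [IPv4Network('10.0.0.0/16')]
```

One aggregate becoming 256 routes means only a handful of de-aggregated blocks are needed to add thousands of entries to every router carrying the full table.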

In Conclusion:

The damage was done, but perhaps it was for the best. People should be looking at making sure their internet connection is ready for when it happens again. People should be asking questions such as: "why are we still using NAT?" and "when are we moving to IPv6?" If your service provider is still relying upon NAT, they are in no position to move to IPv6, and are contributing to the instability of The Internet.